BEFORE GETTING THAT GADGET FOR YOUR TEENAGER, CONSIDER THESE FIRST!

The moment a parent hands a teenager their first serious gadget often feels bigger than it looks. It is not just a phone or a laptop. It is a quiet transition. A step toward independence. A signal of trust. For many families, this moment comes with excitement, hesitation, and a long list of questions that rarely have simple answers.

Teenagers today live in a world where technology is everywhere. School assignments are submitted online. Friendships are maintained through messages and social platforms. Information is available at the tap of a screen. It is no surprise that many parents feel pressure to buy devices earlier than they planned, especially when everyone around them seems to be doing the same. But giving a teenager a gadget is not a decision to rush. It deserves thought, conversation, and clarity.

Before any device changes hands, one question matters more than the brand or the model. Is this teenager ready? Readiness has very little to do with age and everything to do with maturity. Some teenagers can manage screen time, respect boundaries, and communicate openly about what they encounter online. Others may still struggle with impulse control or emotional regulation. A device connected to the internet opens doors to learning and creativity, but it also opens doors to content, conversations, and pressures that can be overwhelming without guidance.

Many parents underestimate how quickly a gadget becomes part of a teenager’s emotional world. It can shift routines, affect sleep, change attention spans, and influence self-esteem. Once exposure begins, it is difficult to reverse. That is why it is important for parents to slow down and consider not just what their teenager wants, but what they truly need at this stage of development.

Clear rules are another part of the conversation that cannot be skipped. Devices without boundaries often create confusion and conflict. Teenagers need structure, even when they push against it. Talking openly about screen time, online safety, social media behavior, and consequences builds trust and prevents misunderstandings. When expectations are clear from the beginning, teenagers are more likely to use their devices responsibly.

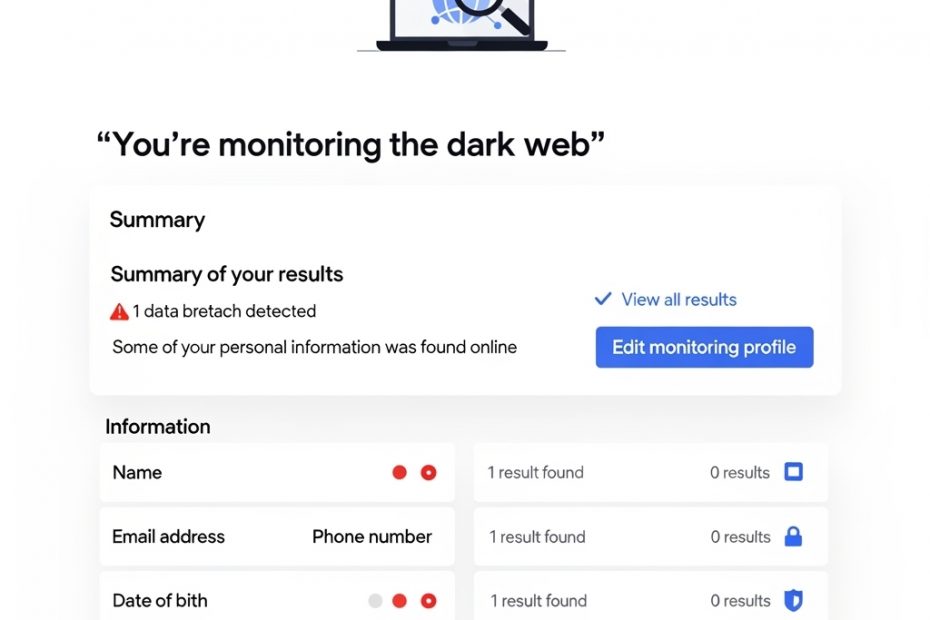

Parental involvement does not end once the device is handed over. Monitoring, guidance, and regular check-ins are essential. This is not about control or surveillance. It is about protection and partnership. Teenagers are learning how to navigate a digital world that even adults are still figuring out. They need support, not silence.

Finally, gadgets can be powerful tools for building responsibility when used intentionally. Involving teenagers in decisions about data usage, care of the device, and balanced routines teaches accountability. Encouraging offline activities, face-to-face relationships, and downtime reminds them that technology is a tool, not a replacement for real life.

Giving a teenager a gadget is not just a purchase. It is a parenting decision that shapes habits, values, and trust. When handled thoughtfully, it can become a positive step forward rather than a source of regret.